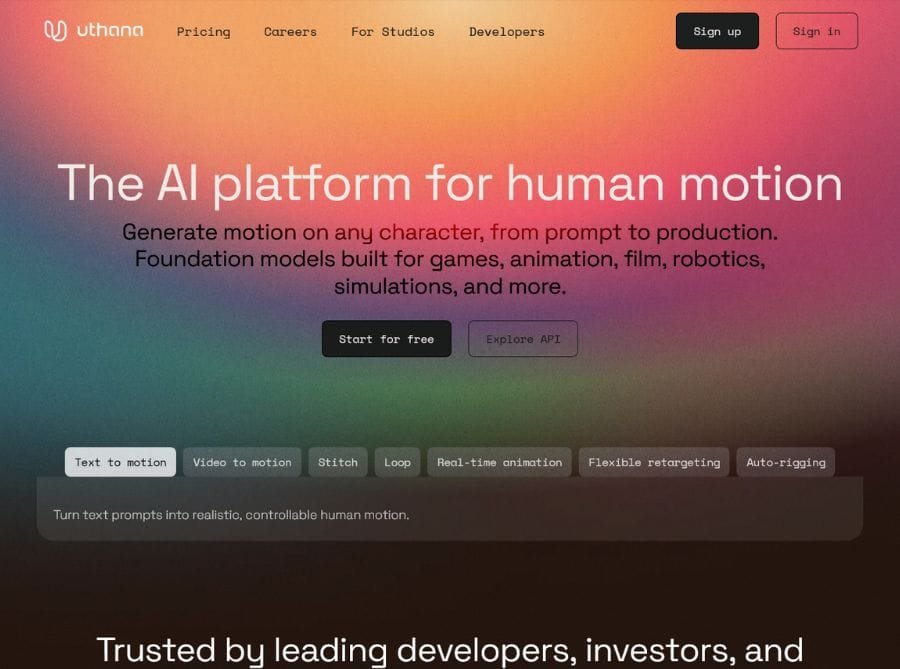

What is Uthana?

Uthana is an AI-powered 3D character animation platform that turns text prompts and 2D videos into realistic, production-ready human motion for games, film, XR, robotics, and simulation. It sits on top of foundation models for human motion, giving studios and solo creators a fast way to go from idea to finished animation without heavy keyframing.

Under the hood, Uthana uses multimodal generative AI plus physics-aware motion synthesis, exposing everything via a simple GraphQL API and SDKs so teams can integrate it directly into their pipelines or tools.
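To give a feel for what calling a GraphQL API from a pipeline script involves, here is a minimal sketch that packages a text-to-motion prompt as a GraphQL POST request using only the Python standard library. The endpoint URL, the `createTextToMotion` mutation, its fields, and the bearer-token header are all hypothetical placeholders chosen for illustration; they are not taken from Uthana's documentation, so check the official API reference for the real schema and authentication scheme.

```python
import json
import urllib.request

# Hypothetical values: the endpoint URL, mutation name, and field names below
# are placeholders for illustration, not Uthana's documented schema.
ENDPOINT = "https://example.invalid/graphql"

TEXT_TO_MOTION_MUTATION = """
mutation TextToMotion($prompt: String!) {
  createTextToMotion(prompt: $prompt) {
    id
    name
  }
}
"""


def build_graphql_request(query: str, variables: dict, api_key: str) -> urllib.request.Request:
    """Package a GraphQL operation as an HTTP POST request (built, not sent)."""
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Content-Type": "application/json",
            # The bearer-token auth scheme is an assumption.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_graphql_request(
    TEXT_TO_MOTION_MUTATION,
    {"prompt": "a character walks forward, then waves"},
    api_key="YOUR_API_KEY",
)

# Inspect the JSON body that would be sent to the server.
payload = json.loads(request.data)
print(payload["variables"]["prompt"])
```

The request is only constructed here; passing it to `urllib.request.urlopen` would send it once a real endpoint and key are substituted.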

Key Features of Uthana

- Text-to-Motion: Generate 3D character animations from simple text descriptions, ideal for rapid prototyping, previs, and blocking.

- Video-to-Motion: Upload 2D reference videos; Uthana extracts and converts them into 3D motion files you can retarget to any rig.

- Stitching, Blending & Looping: Seamlessly blend and stitch multiple motions with keyframe control; create perfect looping sequences for idles, cycles, and game states.

- Real-Time Control: Drive characters in real time using mouse, keyboard, or gamepad, with millisecond-level latency for interactive experiences.

- Rig-Agnostic IK Retargeting: Proprietary inverse kinematics retargeting works with any biped skeleton, applying motion cleanly regardless of rig hierarchy or proportions.

- Massive Motion Library: Access 10,000–100,000+ studio-quality motion assets searchable via natural language, including detailed data like finger motion.

- Broad DCC & Engine Support: Export animations in standard formats such as FBX and GLB for use in Maya, Blender, Unreal Engine, Unity, or any other engine or DCC tool that reads those formats.

- API & SDK Integration: Simple GraphQL API and SDKs let you trigger text-to-motion, video-to-motion, retargeting, and library search from your own tools or backend.

- Enterprise Workflow & Support: Data siloing, custom model training, style transfer for your game look, ML tools for labeling, and dedicated support for studios.
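As a rough illustration of the looping idea in the feature list above, the sketch below crossfades the tail of a clip into its head so that playback can wrap from the last frame to the first without a visible pop. This is a generic textbook technique, not a description of how Uthana implements looping; the `Pose` layout (a flat list of joint values) and the helper names are assumptions made for the example.

```python
from typing import List

# Hypothetical frame layout: one flat list of joint values per frame.
Pose = List[float]


def lerp_pose(a: Pose, b: Pose, t: float) -> Pose:
    """Linearly interpolate two poses (t=0 gives a, t=1 gives b).

    Plain lerp suits positions; joint rotations stored as quaternions
    would need spherical interpolation instead.
    """
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def make_loop(frames: List[Pose], blend: int) -> List[Pose]:
    """Return a shorter clip whose end flows back into its start.

    The last `blend` frames are folded onto the first `blend` frames:
    early output frames lean toward the tail, later ones toward the head.
    """
    n = len(frames)
    if blend < 1 or 2 * blend > n:
        raise ValueError("blend must be between 1 and len(frames) // 2")

    looped = []
    for i in range(blend):
        t = (i + 1) / (blend + 1)  # weight of the head frame rises with i
        looped.append(lerp_pose(frames[n - blend + i], frames[i], t))

    # Frames between the blended head and the removed tail are unchanged.
    looped.extend(frames[blend:n - blend])
    return looped


# Toy clip: 10 frames with a single channel that drifts from 0.0 to 9.0.
clip = [[float(i)] for i in range(10)]
loop = make_loop(clip, blend=2)
print(len(loop))
```

A longer blend window hides a larger mismatch between the start and end poses, at the cost of altering more of the original motion.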

Practical Use Cases of Uthana AI

- Game Development: Rapidly prototype and ship character movesets (walks, attacks, emotes) without hand-keying every clip. Apply a consistent game style to generic motions across multiple characters using style transfer and retargeting.

- Film, TV & Cinematics: Previs complex scenes from scripts using text-to-motion, then refine with video-to-motion for key performances.

- AR/VR & Real-Time Experiences: Use real-time control plus loops to drive avatars in XR, live events, interactive installations, and VTuber-style rigs.

- Robotics & Simulation: Generate realistic human motion traces for robotics training, biomechanics simulations, and digital twin scenarios.

- Indie & Mid-Sized Studios: Compete with AAA output by replacing large mocap sessions with AI-generated motion, while still exporting to existing Maya/Unreal/Unity pipelines.