TINA by Gravitas AI is a powerful, always-on conversational AI chatbot designed to streamline and enhance customer and patient engagement. With the ability to automate appointment scheduling, lead capture, FAQ resolution, and multilingual communication, TINA acts as an intelligent digital assistant tailored to your business needs. It integrates effortlessly with existing CRM systems, ensuring smooth data flow and operational efficiency.

TINA isn’t your average chatbot. It’s an AI-driven virtual assistant designed to handle real-time conversations with human-like understanding. Whether it’s answering customer queries, scheduling appointments, collecting leads, resolving FAQs, or sending reminders, TINA does it all with zero downtime.

What sets TINA apart is its ability to serve a wide range of industries with tailored, industry-specific workflows. From healthcare and pharmaceuticals to real estate, logistics, wellness, finance, education, and e-commerce, TINA seamlessly integrates into your ecosystem, providing end-to-end automation and exceptional user experiences.

Key Features of TINA

- 24/7 Real-Time Chat Support: Engages users at any hour with instant responses to common queries and requests.

- Appointment Booking & Management: Automates scheduling, confirmations, rescheduling, and reminders.

- Multilingual Capabilities: Communicates with diverse audiences across regions in multiple languages.

- Lead Generation & Qualification: Captures, qualifies, and pushes leads directly to your CRM or marketing funnel.

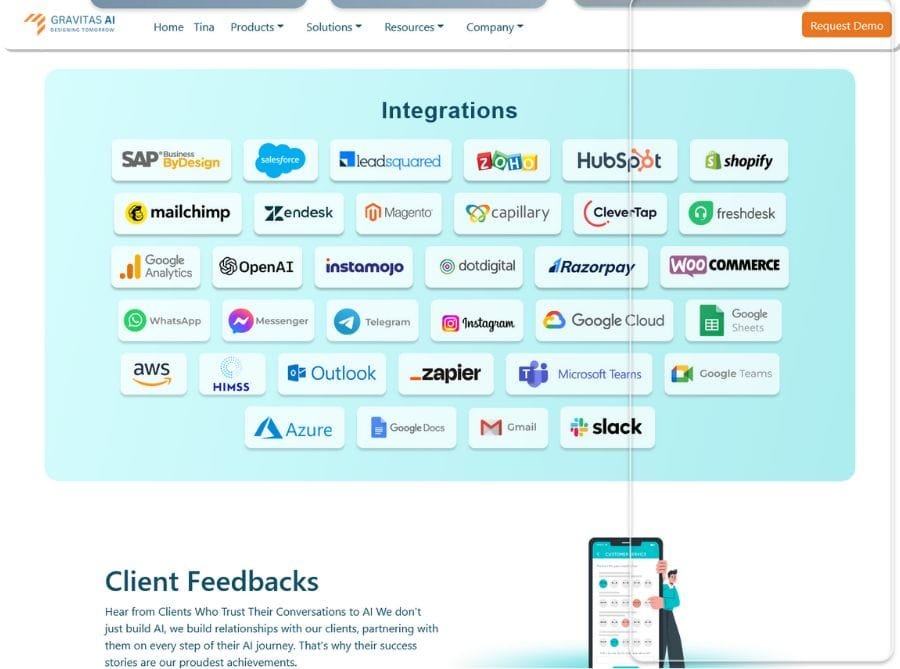

- Seamless CRM Integration: Works smoothly with existing systems to streamline data flow and avoid data silos.

- Analytics & Insights: Tracks engagement, interactions, and conversions to provide actionable business intelligence.

- HIPAA/GDPR Compliance: Maintains high standards of security and privacy when handling sensitive data, in line with HIPAA and GDPR requirements.

Use Cases Across Industries

- Healthcare & Wellness: TINA answers patient queries, schedules consultations, sends prescription reminders, and supports continuity of care, reducing staff burden and improving patient satisfaction.

- Pharma & Diagnostics: Delivers product information, onboards customers, and automates follow-ups for healthcare professionals (HCPs) and patients alike.

- Real Estate & Property Management: Handles inquiries, books property visits, and shares property details, enhancing lead engagement and conversion rates.

- Logistics & Supply Chain: Provides real-time shipment updates and resolves delivery-related FAQs, improving the customer experience.

- Education & EdTech: Assists with student queries, enrollment, and course navigation, boosting operational efficiency.

- E-commerce & Retail: Supports order tracking, product recommendations, returns handling, and more, speeding up resolution and building brand loyalty.

Why Businesses Love TINA

TINA empowers organizations to do more with less. By automating repetitive conversations and routine workflows, it frees up teams to focus on strategic, value-driven tasks. Its AI engine continually learns from interactions, making conversations more contextual, intelligent, and personalized over time.

With TINA, your brand becomes instantly more responsive, efficient, and scalable, without needing an army of support agents. Whether you’re a clinic looking to digitize front-desk interactions or a logistics firm aiming to reduce support tickets, TINA adapts to your needs and grows with you.